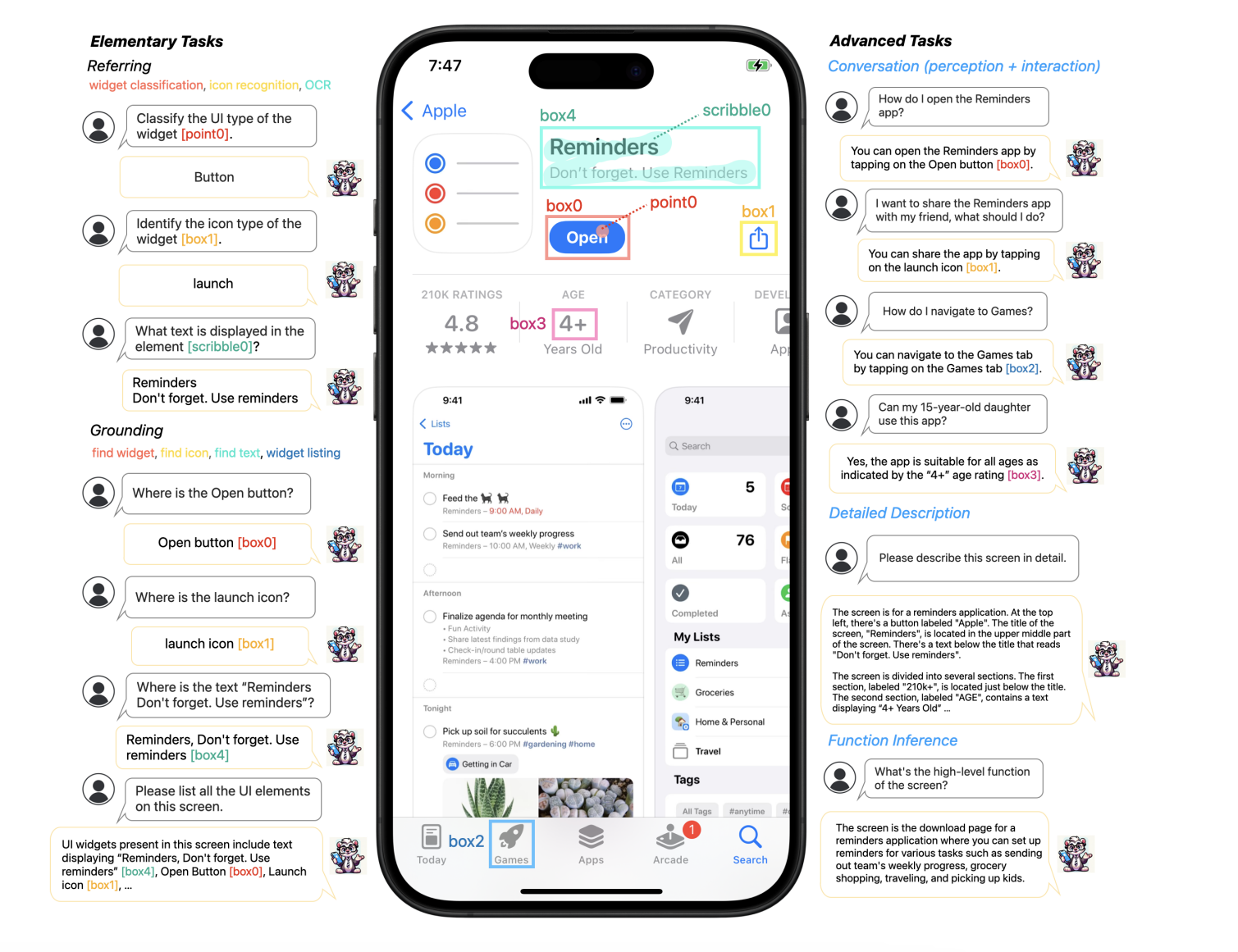

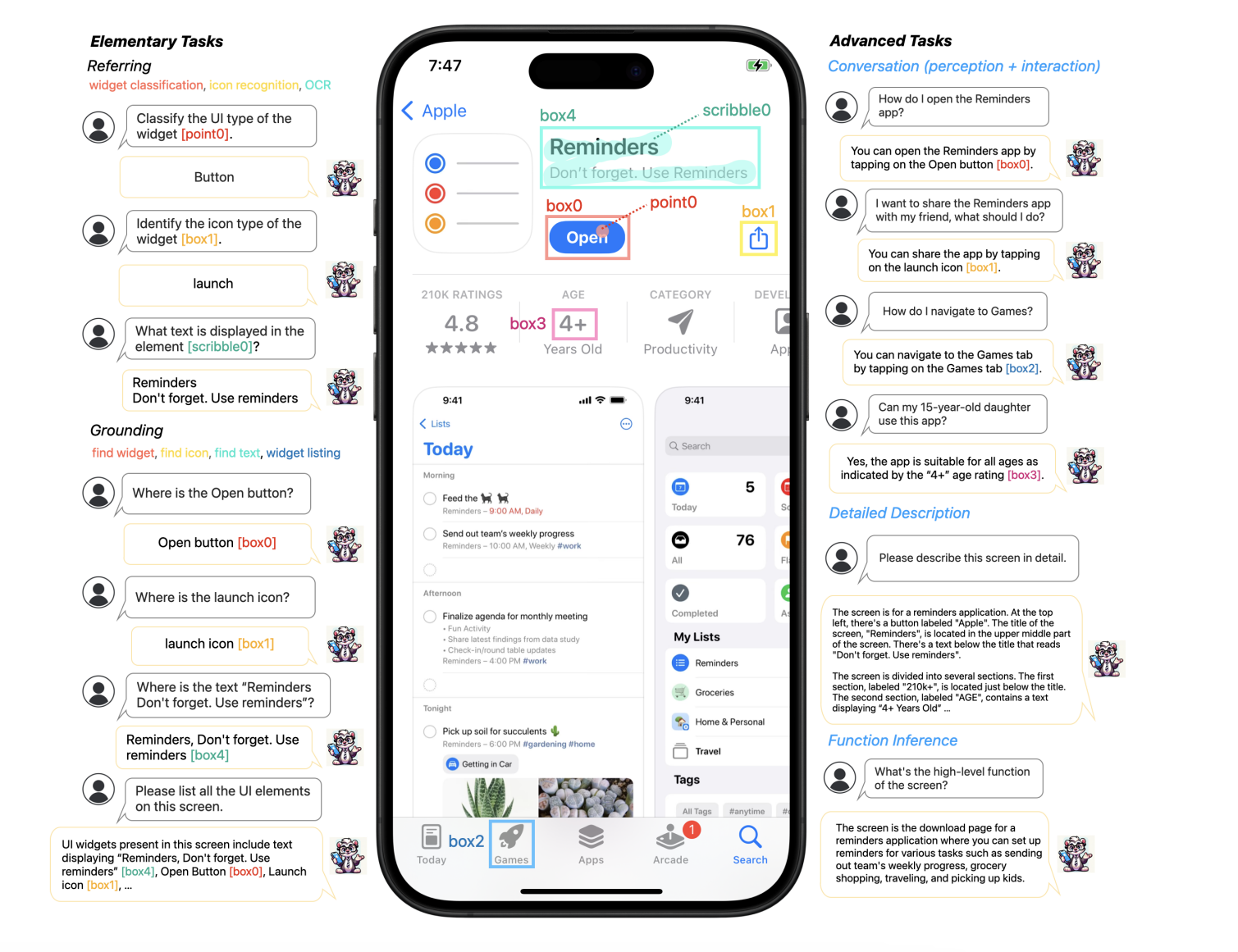

Ferret-UI is a new multimodal large language model (MLLM) tailored for enhanced understanding of mobile UI screens, equipped with referring, grounding, and reasoning capabilities.

Ferret-UI in action, analyzing the display of an iPhone (Image Credit: Apple)

To address this, Ferret-UI introduces a magnification feature that improves the legibility of screen elements by upscaling images to any desired resolution. This capability is a game-changer for AI’s interaction with mobile interfaces.
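To make the magnification idea more concrete, here is a minimal sketch loosely modeled on the aspect-ratio-based splitting described in the Ferret-UI paper: a portrait screen is cut into top and bottom halves, a landscape screen into left and right halves, and each piece is then resized to the image encoder’s input size, so small icons and text receive more pixels. This is an illustration under stated assumptions, not Apple’s implementation. The function names `split_screen`, `magnification`, and `plan_views`, the 336-pixel encoder size, and the example screen dimensions are all hypothetical.

```python
from typing import List, Tuple

# A crop box is (left, top, right, bottom) in pixels.
Box = Tuple[int, int, int, int]


def split_screen(width: int, height: int) -> List[Box]:
    """Split a screenshot into two sub-images according to its aspect ratio.

    Portrait (or square) screens get a horizontal cut (top/bottom halves);
    landscape screens get a vertical cut (left/right halves).
    """
    if width <= 0 or height <= 0:
        raise ValueError("screen dimensions must be positive")
    if height >= width:
        mid = height // 2
        return [(0, 0, width, mid), (0, mid, width, height)]
    mid = width // 2
    return [(0, 0, mid, height), (mid, 0, width, height)]


def magnification(box: Box, encoder_size: int) -> float:
    """Scale factor when a crop is fit (aspect ratio preserved) into a square
    encoder input of side `encoder_size`. Larger means more pixels per UI element."""
    left, top, right, bottom = box
    longest = max(right - left, bottom - top)
    return encoder_size / longest


def plan_views(width: int, height: int, encoder_size: int = 336) -> List[Tuple[Box, float]]:
    """Return the full screen plus its two sub-images, each with its scale factor.

    encoder_size=336 is a hypothetical encoder resolution chosen for illustration.
    """
    full: Box = (0, 0, width, height)
    views = [full] + split_screen(width, height)
    return [(box, round(magnification(box, encoder_size), 3)) for box in views]


if __name__ == "__main__":
    # Hypothetical portrait phone screenshot of 1170 x 2532 pixels.
    for box, scale in plan_views(1170, 2532):
        print(box, scale)
```

On a 1170 x 2532 portrait screenshot, the full screen is scaled by about 0.133 while each half is scaled by about 0.265, so every sub-image shows UI elements at roughly twice the detail of the whole-screen view. Splitting along the longer side is what keeps the halves closer to square, which suits a square encoder input.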

According to the paper, Ferret-UI excels at recognizing and categorizing widgets, icons, and text on mobile screens. It supports various input methods, such as pointing, drawing boxes, or scribbling. By performing these tasks, the model develops a solid grasp of visual and spatial information, which helps it distinguish between different UI elements with precision.

What sets Ferret-UI apart is its ability to work directly with raw screen pixel data, eliminating the need for external detection tools or screen view files. This approach significantly enhances single-screen interactions and opens up possibilities for new applications, such as improving device accessibility. The research paper highlights Ferret-UI’s proficiency in tasks related to identification, localization, and reasoning. This breakthrough suggests that advanced AI models like Ferret-UI could revolutionize UI interaction, offering more intuitive and efficient user experiences.

What if Ferret-UI is integrated into Siri?

While it isn’t confirmed whether Ferret-UI will be integrated into Siri or other Apple services, the potential benefits are intriguing. By enhancing the understanding of mobile UIs through a multimodal approach, Ferret-UI could significantly improve voice assistants like Siri in several ways.

This could mean Siri gets better at understanding what users want to do inside apps, perhaps even handling more complicated tasks. It could also help Siri grasp the context of queries by taking into account what is on the screen. Ultimately, this could make using Siri a smoother experience, letting it handle actions like navigating through apps or understanding what is happening visually.